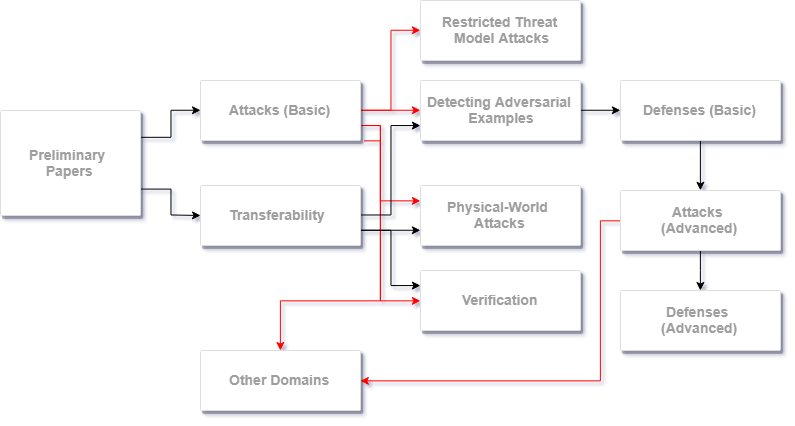

Post 0: Adversarial Machine Learning Paper Reading Challenge

Day 0 of the Paper Reading Challenge, focusing on Adversarial Machine Learning

Preliminary Papers

- [ ] Evasion Attacks against Machine Learning at Test Time

- [ ] Intriguing properties of neural networks

- [ ] Explaining and Harnessing Adversarial Examples

Attacks [requires Preliminary Papers]

- [ ] The Limitations of Deep Learning in Adversarial Settings

- [ ] DeepFool: a simple and accurate method to fool deep neural networks

- [ ] Towards Evaluating the Robustness of Neural Networks

Transferability [requires Preliminary Papers]

- [ ] Transferability in Machine Learning: from Phenomena to Black-Box Attacks using Adversarial Samples

- [ ] Delving into Transferable Adversarial Examples and Black-box Attacks

- [ ] Universal adversarial perturbations

Detecting Adversarial Examples [requires Attacks, Transferability]

- [ ] On Detecting Adversarial Perturbations

- [ ] Detecting Adversarial Samples from Artifacts

- [ ] Adversarial Examples Are Not Easily Detected: Bypassing Ten Detection Methods

Restricted Threat Model Attacks [requires Attacks]

- [ ] ZOO: Zeroth Order Optimization based Black-box Attacks to Deep Neural Networks without Training Substitute Models

- [ ] Decision-Based Adversarial Attacks: Reliable Attacks Against Black-Box Machine Learning Models

- [ ] Prior Convictions: Black-Box Adversarial Attacks with Bandits and Priors

Physical-World Attacks [requires Attacks, Transferability]

- [ ] Adversarial examples in the physical world

- [ ] Synthesizing Robust Adversarial Examples

- [ ] Robust Physical-World Attacks on Deep Learning Models

Verification [requires Preliminary Papers]

- [ ] Reluplex: An Efficient SMT Solver for Verifying Deep Neural Networks

- [ ] On the Effectiveness of Interval Bound Propagation for Training Verifiably Robust Models

Defenses (2) [requires Detecting]

- [ ] Towards Deep Learning Models Resistant to Adversarial Attacks

- [ ] Certified Robustness to Adversarial Examples with Differential Privacy

Attacks (2) [requires Defenses (2)]

- [ ] Obfuscated Gradients Give a False Sense of Security: Circumventing Defenses to Adversarial Examples

- [ ] Adversarial Risk and the Dangers of Evaluating Against Weak Attacks

Defenses (3) [requires Attacks (2)]

- [ ] Towards the first adversarially robust neural network model on MNIST

- [ ] On Evaluating Adversarial Robustness

Other Domains [requires Attacks]

- [ ] Adversarial Attacks on Neural Network Policies

- [ ] Audio Adversarial Examples: Targeted Attacks on Speech-to-Text

- [ ] Seq2Sick: Evaluating the Robustness of Sequence-to-Sequence Models with Adversarial Examples

- [ ] Adversarial examples for generative models

Visualized using the Adversarial Machine Learning Reading List by Nicholas Carlini (link)

Key researchers and their affiliations:

- Nicholas Carlini (Google Brain)

- Anish Athalye (MIT)

- Nicolas Papernot (Google Brain)

- Wieland Brendel (University of Tübingen)

- Jonas Rauber (University of Tübingen)

- Dimitris Tsipras (MIT)

- Ian Goodfellow (Google Brain)

- Aleksander Madry (MIT)

- Alexey Kurakin (Google Brain)

Research Labs:

- Bethge Lab: http://bethgelab.org/

Frameworks/Libraries:

- cleverhans: TensorFlow

- foolbox: Keras/TensorFlow/PyTorch

- advertorch: PyTorch